understanding of the

future of AI

Our approach

Epoch AI is a multidisciplinary research institute investigating the trajectory of Artificial Intelligence (AI). We scrutinize the driving forces behind AI and forecast its ramifications for the economy and society.

We emphasize making our research accessible through our reports, models and visualizations to help ground the discussion of AI on a solid empirical footing. Our goal is to create a healthy scientific environment, where claims about AI are discussed with the rigor they merit.

Our research covers the following areas:

Trends in Machine Learning

We conduct in-depth analyses of compute, data, and investment trends to solidify our understanding of AI's trajectory.

Visit Trends page

Economics of AI automation

We build models to understand the economic drivers and impacts of AI automation.

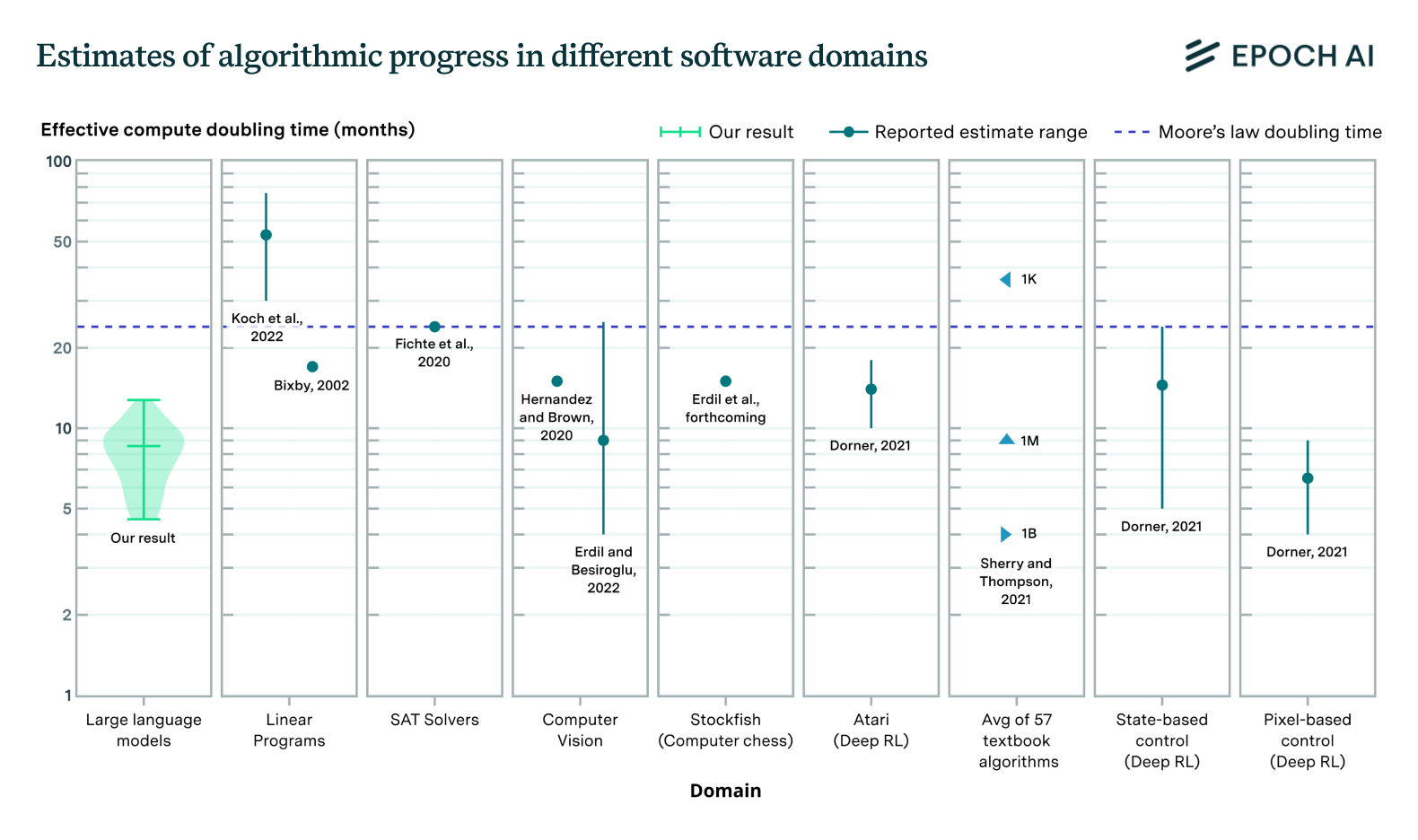

Open takeoff model playground

Algorithmic progress

We investigate how innovations in AI are allowing us to build more capable models with fewer resources.

See publications

Data in Machine Learning

We research the challenges and solutions related to data bottlenecks that AI labs may encounter.

See publications

Highlighted research

Training Compute of Frontier AI Models Grows by 4-5x per Year

Our expanded AI model database shows that the compute used to train recent models grew 4-5x yearly from 2010 to May 2024. We find similar growth in frontier models, recent large language models, and models from leading companies.
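To put the 4-5x yearly figure in more familiar terms, a growth factor can be converted into a doubling time. The following is a minimal sketch, assuming growth is smoothly exponential within each year; the function name `doubling_time_months` is illustrative and is not taken from Epoch AI's own code.

```python
import math


def doubling_time_months(annual_growth_factor: float) -> float:
    """Months needed to double, assuming constant exponential growth.

    Hypothetical helper for illustration only.
    """
    if annual_growth_factor <= 1:
        raise ValueError("growth factor must be greater than 1")
    return 12 * math.log(2) / math.log(annual_growth_factor)


# The two ends of the 4-5x per year range quoted above.
for factor in (4.0, 5.0):
    months = doubling_time_months(factor)
    print(f"{factor:.0f}x per year -> doubling roughly every {months:.1f} months")
```

Under this assumption, 4x per year corresponds to a doubling every 6 months, and 5x per year to a slightly shorter doubling time.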

Algorithmic Progress in Language Models

Progress in language model performance surpasses what we'd expect from merely increasing computing resources, occurring at a pace equivalent to doubling computational power every 5 to 14 months.
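The doubling-time range can also be read as an equivalent yearly multiplier on effective compute. The sketch below assumes steady exponential progress; `annual_growth_factor` is a hypothetical helper name used only for this illustration.

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Yearly multiplier implied by a doubling time in months.

    Hypothetical helper; assumes constant exponential growth.
    """
    if doubling_months <= 0:
        raise ValueError("doubling time must be positive")
    return 2 ** (12 / doubling_months)


# The two ends of the 5 to 14 month range quoted above.
for months in (5, 14):
    factor = annual_growth_factor(months)
    print(f"doubling every {months} months -> about {factor:.1f}x per year")
```

A shorter doubling time implies a larger yearly multiplier, so the 5-month end of the range is the faster estimate of algorithmic progress.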

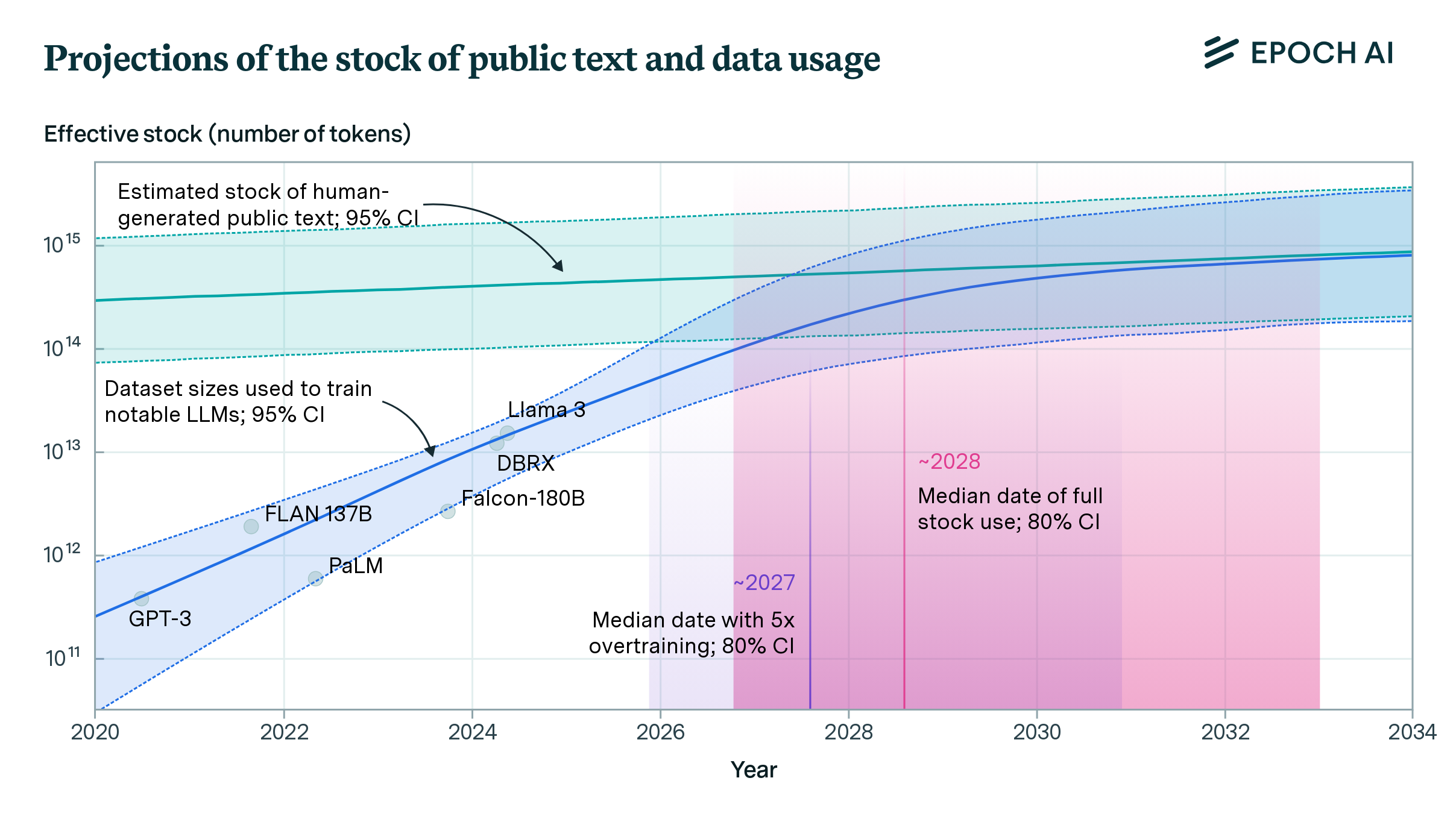

Will We Run Out of Data? Limits of LLM Scaling Based on Human-Generated Data

We estimate the stock of human-generated public text at around 300 trillion tokens. If trends continue, language models will fully utilize this stock between 2026 and 2032, or even earlier if intensely overtrained.