Compute Trends Across Three Eras of Machine Learning

We’ve compiled a dataset of the training compute for over 120 machine learning models, highlighting novel trends and insights into the development of AI since 1952, and what to expect going forward.

This paper was originally published on Feb 16, 2022. For the latest research and updates on this subject, please see: Training Compute of Frontier AI Models Grows by 4-5x per Year.

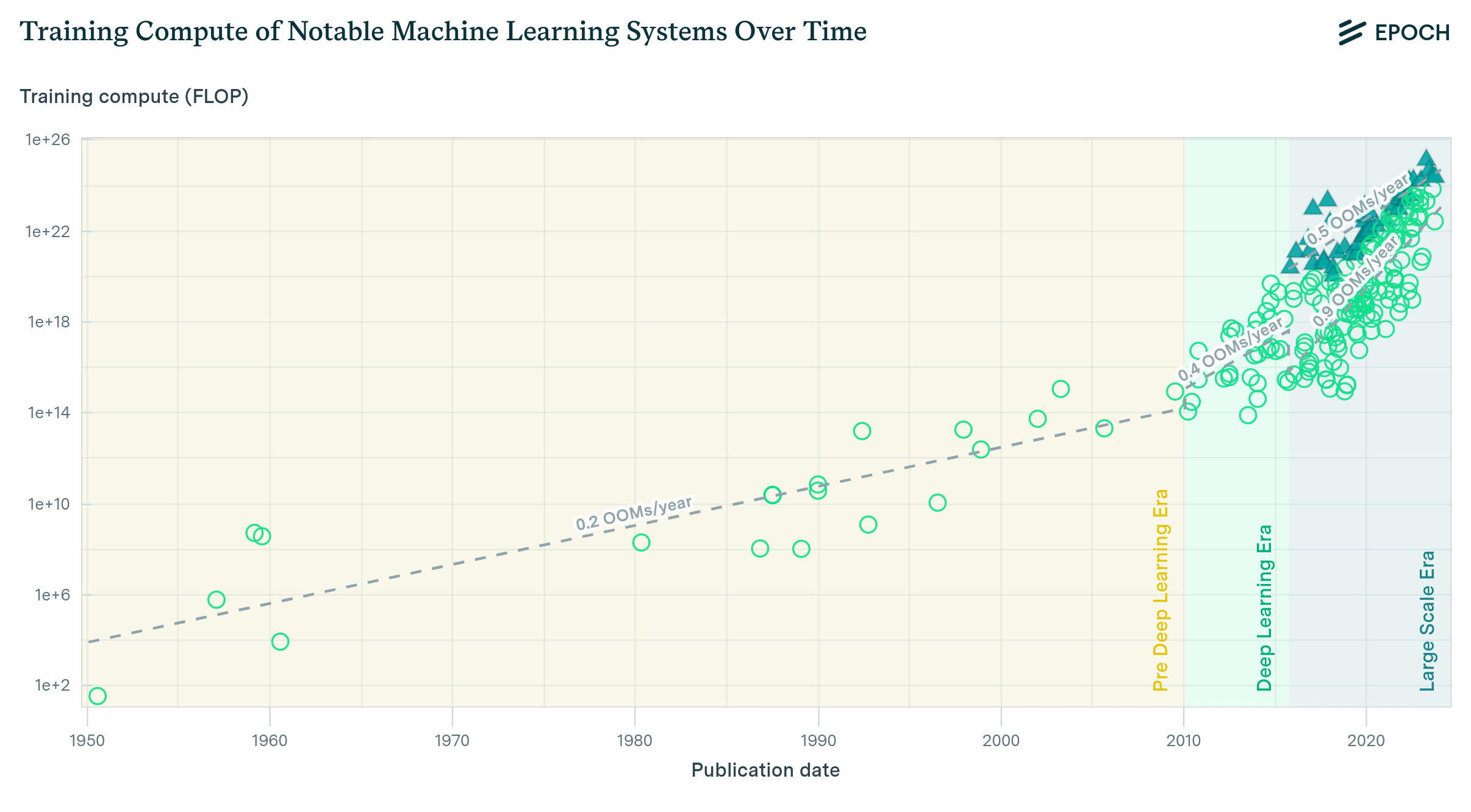

Summary: We have collected a dataset and analysed key trends in the training compute of machine learning models since 1950. We identify three major eras of training compute - the pre-Deep Learning Era, the Deep Learning Era, and the Large-Scale Era. Furthermore, we find that the training compute has grown by a factor of 10 billion since 2010, with a doubling rate of around 5-6 months. See our recent paper, Compute Trends Across Three Eras of Machine Learning, for more details.

Introduction

It is well known that progress in machine learning (ML) is driven by three primary factors - algorithms, data, and compute. This makes intuitive sense - the development of algorithms like backpropagation transformed the way that machine learning models were trained, leading to significantly improved efficiency compared to previous optimisation techniques (Goodfellow et al., 2016; Rumelhart et al., 1986). Data has become increasingly available, particularly with the advent of “big data” in recent years. At the same time, progress in computing hardware has been rapid, with increasingly powerful and specialised AI hardware (Heim, 2021; Khan and Mann, 2020).

What is less obvious is the relative importance of these factors, and what this implies for the future of AI. Kaplan et al. (2020) studied these developments through the lens of scaling laws, identifying three key variables:

- Number of parameters of a machine learning model

- Training dataset size

- Compute required for the final training run of a machine learning model (henceforth referred to as training compute)

Trying to understand the relative importance of these is challenging because our theoretical understanding of them is insufficient - instead, we need to take large quantities of data and analyse the resulting trends. Previously, we looked at trends in parameter counts of ML models - in this paper, we try to understand how training compute has evolved over time.

Amodei and Hernandez (2018) laid the groundwork for this, finding a \(300,000\times\) increase in training compute from 2012 to 2018, doubling every 3.4 months. However, their investigation had only around 15 datapoints, and did not include some of the most impressive recent ML models like GPT-3 (Brown et al., 2020).

Motivated by these problems, we have curated the largest ever dataset containing the training compute of machine learning models, with over 120 datapoints. Using this data, we have drawn several novel insights into the significance of compute as an input to ML models.

These findings have implications for the future of AI development, and how governments should orient themselves to compute governance and a future with powerful AI systems.

Methodology

Following the approach taken by OpenAI (Amodei and Hernandez, 2018), we use two main methods for determining the training compute[1] of ML systems:

- Counting the number of operations: The training compute can be determined from the number of arithmetic operations performed by the machine. By looking at the model architecture and closely monitoring the training process, we can directly calculate the total number of multiplications and additions, yielding the training compute. As ML models grow more complex (as continues to be the case), this approach becomes increasingly tedious and tricky. It also requires knowledge of key details of the training process[2], which may not always be accessible.

- GPU-time: A second approach, which is independent of the model architecture, uses information about the total training time and hardware to estimate the training compute. This method typically requires making several assumptions about the training process, which leads to greater uncertainty in the final value.[3]
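
The two estimation methods above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the exact procedure used for the dataset: the function names and all input numbers are hypothetical, the factor for the backward pass (`backward_to_forward_ratio=2.0`) is a commonly used heuristic that does not come from this article, and the 10% utilisation mirrors the assumption mentioned in the footnote on GPU utilisation.

```python
def compute_from_operations(forward_ops_per_example: float,
                            examples_per_iteration: int,
                            iterations: int,
                            backward_to_forward_ratio: float = 2.0) -> float:
    """Approach 1: count arithmetic operations over the whole training run.

    backward_to_forward_ratio=2.0 is an assumed heuristic (the backward pass
    costing about twice the forward pass); it is not taken from the article.
    """
    ops_per_example = forward_ops_per_example * (1.0 + backward_to_forward_ratio)
    return ops_per_example * examples_per_iteration * iterations


def compute_from_gpu_time(num_gpus: int,
                          training_days: float,
                          peak_flop_per_second: float,
                          utilisation: float) -> float:
    """Approach 2: number of GPUs x training time x peak throughput x utilisation."""
    seconds = training_days * 24 * 60 * 60
    return num_gpus * seconds * peak_flop_per_second * utilisation


# Hypothetical inputs, chosen only to make the arithmetic easy to follow.
ops_estimate = compute_from_operations(
    forward_ops_per_example=1e9, examples_per_iteration=256, iterations=100_000)
gpu_estimate = compute_from_gpu_time(
    num_gpus=8, training_days=10, peak_flop_per_second=1e13, utilisation=0.10)

print(f"operation counting: {ops_estimate:.2e} FLOP")
print(f"GPU-time:           {gpu_estimate:.2e} FLOP")
```

The GPU-time estimate scales linearly with the assumed utilisation, which is one reason this method carries more uncertainty than a direct operation count.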

Most of the time, we were able to use one of the above approaches to estimate the training compute for a particular ML model. In practice, this process involved many difficulties, since authors would often not publish key information about the hardware used or the training time.

Of course, it would be infeasible for us to gather this data for all ML systems since 1950. Instead, we focus on milestone systems, based on the following criteria:

- Clear importance: These are systems that have major historical influence, significantly improve on the state-of-the-art, or have over 1000 citations[4]

- Relevance: We only include papers which include experimental results and a key machine learning component, and have a goal of pushing the existing state-of-the-art

- Uniqueness: If another paper describing the same system is more influential, then the paper is excluded from our dataset

This selection process lets us focus on the most important systems, helping us understand the key drivers of the state-of-the-art.

Results

Using these techniques, we compiled a dataset with training compute for over 120 milestone ML systems, the largest such dataset yet. We have chosen to make this and our interactive data visualisation publicly available, in order to facilitate further research along the same lines.

This visualization is updated as we collect further information on notable ML systems. As a result, the showcased trends differ from the time of our original publication.

The interactive visualization originally featured in this article now has its own dedicated page for a more comprehensive experience.

When analysing the gathered data, we draw two main conclusions.

- Trends in training compute are slower than previously reported

- We identify three eras of training compute usage across machine learning

Compute trends are slower than previously reported

In the previous investigation by Amodei and Hernandez (2018), the authors found that the training compute used was growing extremely rapidly - doubling every 3.4 months. With approximately 10 times more data than the original study, we find a doubling time closer to 6 months. This is still extraordinarily fast! Since 2010, the amount of training compute for machine learning models has grown by a factor of 10 billion, significantly exceeding a naive extrapolation of Moore’s Law.

This suggests that many previous analyses based on OpenAI’s paper assumed progress that was too rapid, with doubling times roughly half as long as the one we find.
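
A doubling time like the ones quoted in this section can be obtained from a log-linear fit: regress the logarithm of training compute on publication date and convert the slope into months per doubling. The sketch below is a minimal illustration of that idea, not the paper's actual analysis code; the function name is hypothetical and the data is synthetic, constructed to grow by exactly 4x per year.

```python
import math


def doubling_time_months(dates_in_years, training_compute):
    """Least-squares fit of log2(compute) against time; returns months per doubling."""
    logs = [math.log2(c) for c in training_compute]
    n = len(dates_in_years)
    mean_t = sum(dates_in_years) / n
    mean_y = sum(logs) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in zip(dates_in_years, logs))
    var = sum((t - mean_t) ** 2 for t in dates_in_years)
    slope = cov / var  # doublings per year
    return 12.0 / slope


# Synthetic data (not from the dataset): compute grows by exactly 4x per year.
years = [2012.0, 2013.0, 2014.0, 2015.0, 2016.0]
compute = [1e15 * 4 ** (y - 2012.0) for y in years]

months = doubling_time_months(years, compute)
print(f"doubling time: {months:.2f} months")  # about 6 months for 4x per year
```

On real data the points scatter around the fitted line, so the estimate comes with an uncertainty that depends on how many systems are included and which ones.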

Three eras of machine learning

One of the more speculative contributions of our paper is that we argue for the presence of three eras of machine learning. This is in contrast to prior work, which identifies two trends separated by the start of the Deep Learning revolution (Amodei and Hernandez, 2018). Instead, we split the history of ML compute into three eras:

- The Pre-Deep Learning Era: Prior to Deep Learning, training compute approximately follows Moore’s Law, with a doubling time of approximately every 20 months.

- The Deep Learning Era: This starts somewhere between 2010 and 2012[5], and displays a doubling time of approximately 6 months.

- The Large-Scale Era: Arguably, a separate trend of models breaks off the main trend between 2015 and 2016. These systems are distinctive in that they are run by large corporations, and use training compute 2-3 orders of magnitude larger than systems that follow the Deep Learning Era trend in the same year. Interestingly, the growth of compute in these Large-Scale models seems slower, with a doubling time of about 10 months.
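
To compare the eras on a common scale, it can help to convert each doubling time into a growth factor per year, using growth = 2^(12 / doubling time in months). The snippet below is a small illustrative calculation; the function name is hypothetical, and the doubling times are the approximate figures quoted in the list above, plus the 3.4 months reported by Amodei and Hernandez (2018).

```python
def annual_growth_factor(doubling_months: float) -> float:
    """Multiplicative growth over 12 months for a given doubling time in months."""
    return 2.0 ** (12.0 / doubling_months)


# Approximate doubling times (in months) as quoted in the text.
doubling_times = {
    "Pre-Deep Learning Era": 20,
    "Deep Learning Era": 6,
    "Large-Scale Era": 10,
    "Amodei and Hernandez (2018)": 3.4,
}

for name, months in doubling_times.items():
    print(f"{name}: about {annual_growth_factor(months):.1f}x per year")
```

A 6-month doubling time corresponds to 4x growth per year, while a 20-month doubling time corresponds to roughly 1.5x per year, which makes the break between the first two eras easy to see.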

A key benefit of this framing is that it helps make sense of developments over the last two decades of ML research. Deep Learning marked a major paradigm shift in ML, with an increased focus on training larger models, using larger datasets, and using more compute. The bifurcation of the Deep Learning trend coincides with the shift in focus towards major projects at large corporations, such as DeepMind and OpenAI.

However, there is a fair bit of ambiguity with this framing. For instance, how do we know exactly which models can be considered large-scale? How can we be sure that this “large-scale” trend isn’t just due to noise? To investigate these questions, we used different statistical thresholds for what counts as “large-scale”, and the resulting trend does not change very much. The findings are therefore at least somewhat robust to different selection criteria. Of course, the exact threshold that we use is still debatable, and it is hard to be certain about the observed trends without more data.
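
The kind of robustness check described above can be sketched as follows: pick a threshold, select the models that exceed it, fit the trend on the selected subset, and repeat for several thresholds. This is only an illustration under loud assumptions. The selection rule used here (a model counts as large-scale if its log10 compute is at least `threshold_oom` orders of magnitude above the median of models from the same year) is a simplified stand-in, not the statistical criterion used in the paper, and the data is synthetic.

```python
import math
from statistics import median


def doubling_time_months(points):
    """Least-squares slope of log10(compute) vs year, converted to months per doubling."""
    n = len(points)
    mean_t = sum(t for t, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((t - mean_t) * (y - mean_y) for t, y in points)
    var = sum((t - mean_t) ** 2 for t, _ in points)
    return 12.0 * math.log10(2) / (cov / var)


def large_scale_subset(points, threshold_oom):
    """Keep models at least threshold_oom orders of magnitude above their year's median."""
    by_year = {}
    for t, y in points:
        by_year.setdefault(t, []).append(y)
    return [(t, y) for t, y in points if y - median(by_year[t]) >= threshold_oom]


# Synthetic (year, log10 FLOP) points: a main trend plus a slower-growing cluster above it.
points = []
for k in range(6):
    year = 2016 + k
    main = 17.0 + 0.6 * k    # hypothetical main trend, 0.6 orders of magnitude per year
    large = 20.0 + 0.36 * k  # hypothetical large-scale trend, 0.36 orders of magnitude per year
    points += [(year, main - 0.5), (year, main), (year, main + 0.5),
               (year, large), (year, large + 0.3)]

results = {}
for threshold in (0.5, 1.0, 1.25):
    subset = large_scale_subset(points, threshold)
    results[threshold] = (len(subset), doubling_time_months(subset))
    print(f"threshold {threshold}: {len(subset)} models, "
          f"doubling time {results[threshold][1]:.1f} months")
```

In this synthetic example the upper cluster is cleanly separated by construction, so every threshold selects the same models and the fitted doubling time is stable. On real data the selected set shifts with the threshold, and the purpose of the check is to see how much the fitted trend moves as a result.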

Implications and further work

We expect that future work will build upon this research project. Using the aforementioned compute estimation techniques, more training compute data can be gathered, offering the potential for more conclusive analyses. We can also make the data gathering process easier, such as by:

- Developing tools for automatically measuring training compute usage (as well as inference compute)

- Publishing key details about the training process, such as the GPU model used

Taking these steps helps key actors obtain valuable information in the future.

Naturally, we will also be looking at trends in dataset sizes, and comparing the relative importance of data and compute for increased performance. We can also look at how factors like funding and talent influence the primary inputs of an ML system, like data and compute.

Answering questions like these is crucial for understanding what the future of AI will look like. At Epoch AI, we’re particularly concerned about ensuring that AI is developed in a beneficial way, with appropriate governance intervention to ensure safety. Better understanding the progress of compute capabilities can help us better navigate a future with powerful AI systems.

Read the full paper now on the arXiv.

1. Specifically, we focus on the final training run of an ML system. This is primarily due to measurability - researchers generally do not report the compute or training time that does not directly contribute to the final machine learning model. We simply do not have sufficient information to determine the total compute used across the entire experimentation process.

2. I.e. the total number of iterations during training.

3. For instance, we often assumed that the GPU utilisation rate was 10%. We often also had to guess which GPU model was in use based on the year in which the paper was published, in the event that this information was not disclosed in the paper of interest and the authors were not able to provide an answer.

4. These criteria are ambiguous and can vary on a case-by-case basis. For instance, new papers (published within the last year or two) can be very influential without having had the time to gather many citations. In such cases we make relatively subjective decisions about the importance of these ML models.

5. We discuss this more in Appendix D of the paper. While AlexNet (Krizhevsky et al., 2012) is commonly associated with the start of Deep Learning, we argue that models before AlexNet have key features commonly associated with Deep Learning, and that 2010 is most consistent with the evidence.