Can AI Scaling Continue Through 2030?

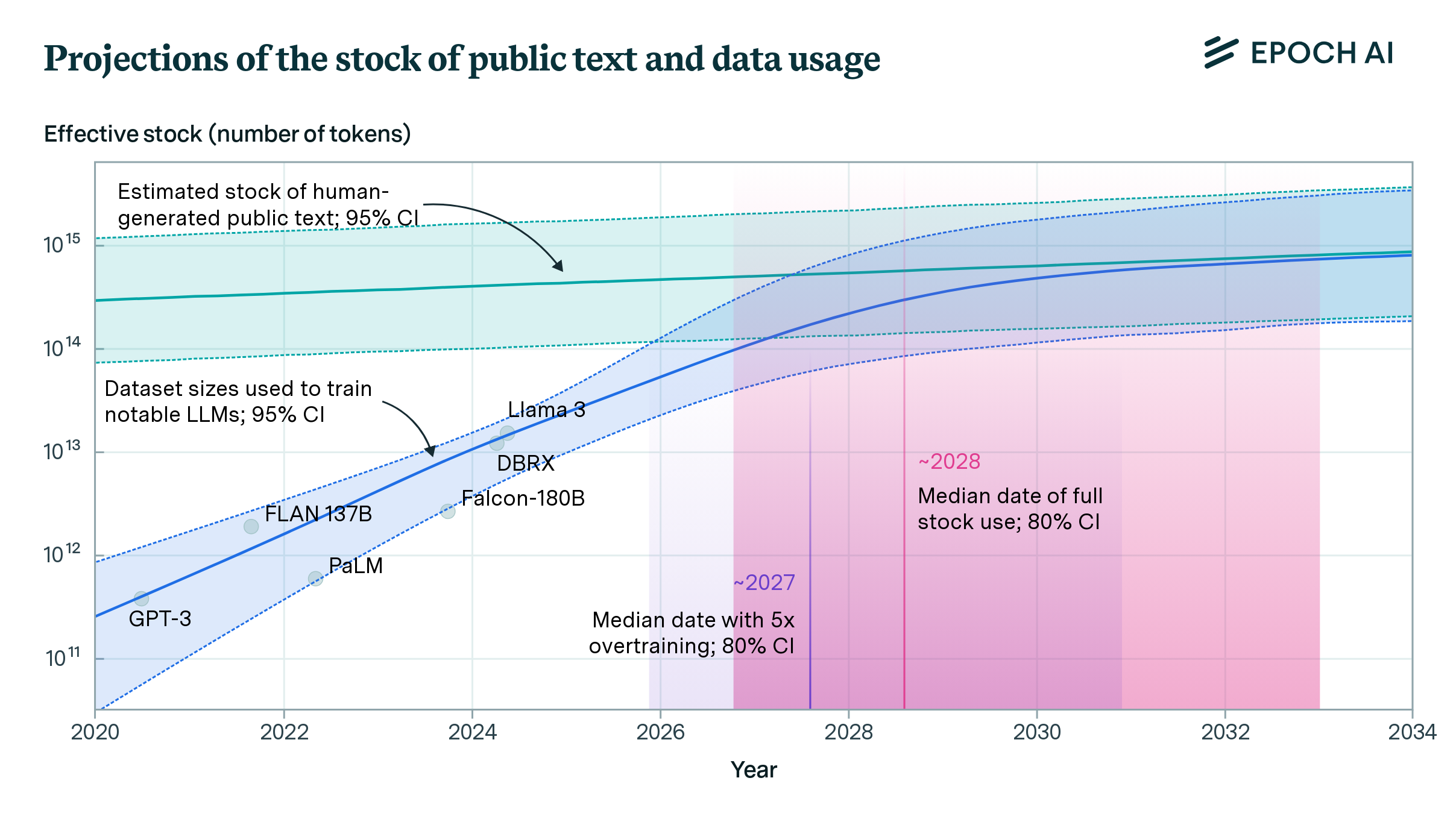

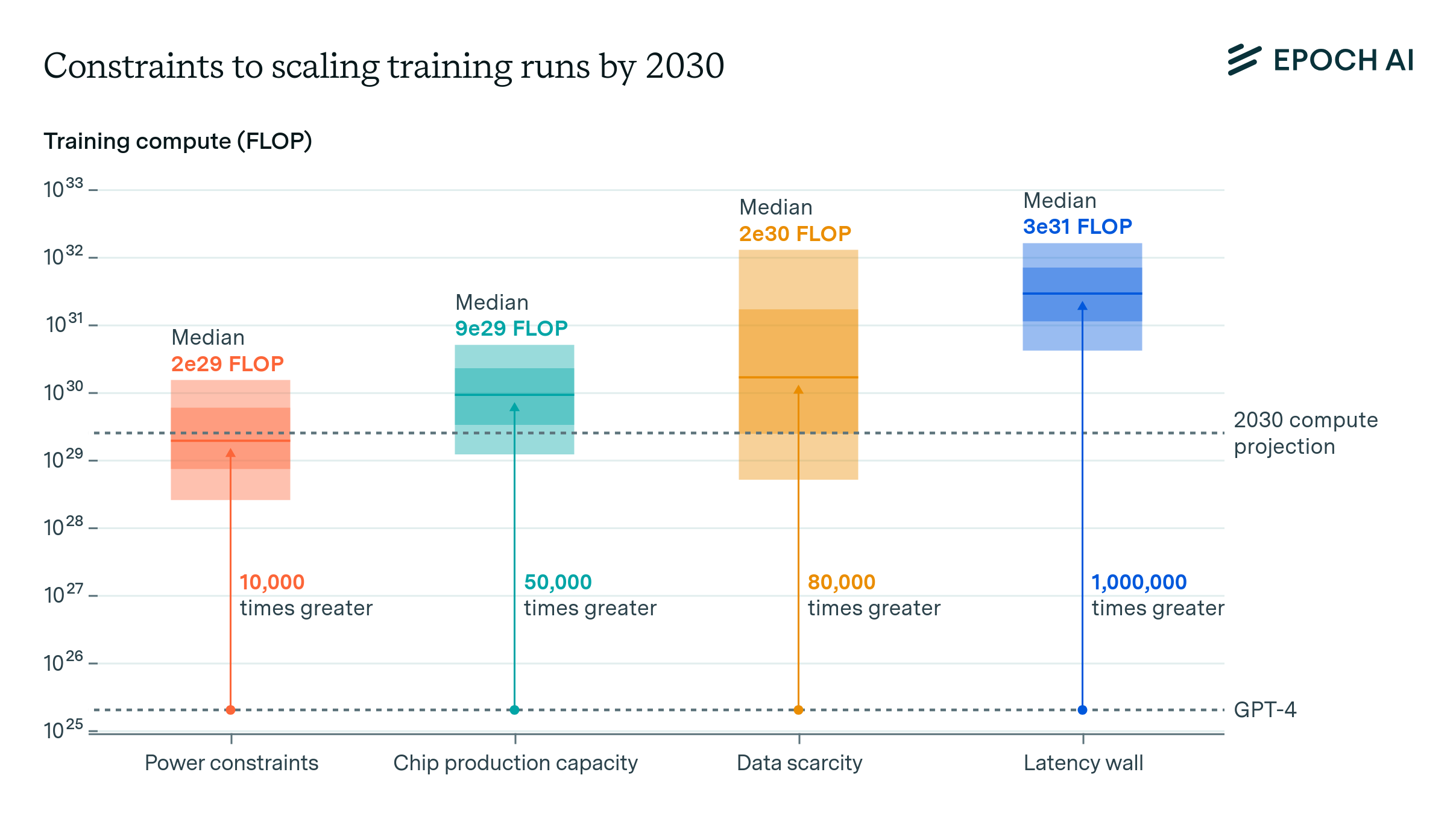

We investigate the scalability of AI training runs. We identify electric power, chip manufacturing, data and latency as constraints. We conclude that 2e29 FLOP training runs will likely be feasible by 2030.

Published

Resources

Introduction

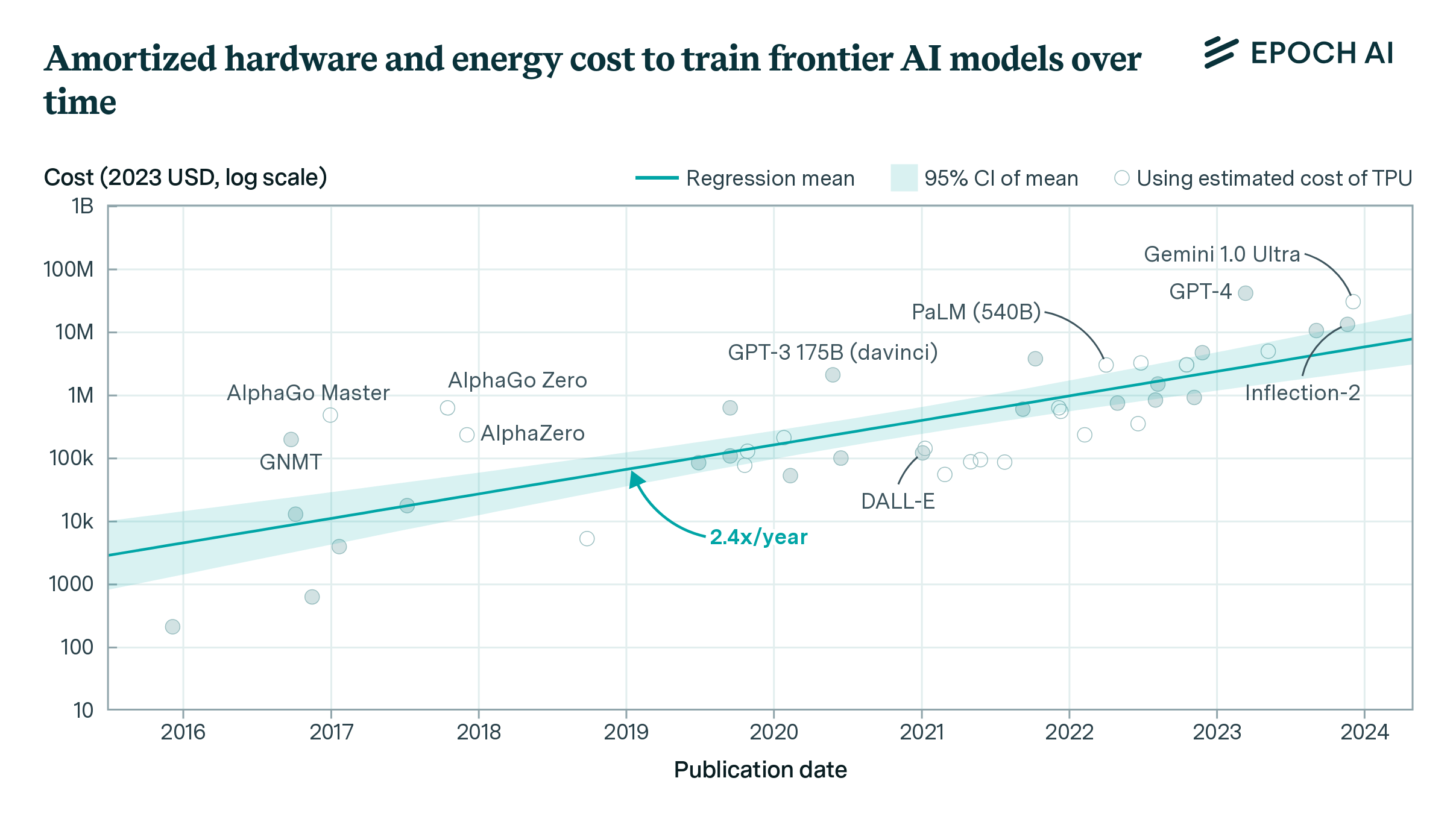

In recent years, the capabilities of AI models have significantly improved. Our research suggests that this growth in computational resources accounts for a significant portion of AI performance improvements.1 The consistent and predictable improvements from scaling have led AI labs to aggressively expand the scale of training, with training compute expanding at a rate of approximately 4x per year.

To put this 4x annual growth in AI training compute into perspective, it outpaces even some of the fastest technological expansions in recent history. It surpasses the peak growth rates of mobile phone adoption (2x/year, 1980-1987), solar energy capacity installation (1.5x/year, 2001-2010), and human genome sequencing (3.3x/year, 2008-2015).

Here, we examine whether it is technically feasible for the current rapid pace of AI training scaling—approximately 4x per year—to continue through 2030. We investigate four key factors that might constrain scaling: power availability, chip manufacturing capacity, data scarcity, and the “latency wall”, a fundamental speed limit imposed by unavoidable delays in AI training computations.

Our analysis incorporates the expansion of production capabilities, investment, and technological advancements. This includes, among other factors, examining planned growth in advanced chip packaging facilities, construction of additional power plants, and the geographic spread of data centers to leverage multiple power networks. To account for these changes, we incorporate projections from various public sources: semiconductor foundries’ planned expansions, electricity providers’ capacity growth forecasts, other relevant industry data, and our own research.

We find that training runs of 2e29 FLOP will likely be feasible by the end of this decade. In other words, by 2030 it will be very likely possible to train models that exceed GPT-4 in scale to the same degree that GPT-4 exceeds GPT-2 in scale.2 If pursued, we might see by the end of the decade advances in AI as drastic as the difference between the rudimentary text generation of GPT-2 in 2019 and the sophisticated problem-solving abilities of GPT-4 in 2023.

Whether AI developers will actually pursue this level of scaling depends on their willingness to invest hundreds of billions of dollars in AI expansion over the coming years. While we briefly discuss the economics of AI investment later, a thorough analysis of investment decisions is beyond the scope of this report.

For each bottleneck we offer a conservative estimate of the relevant supply and the largest training run they would allow.3 Throughout our analysis, we assume that training runs could last between two to nine months, reflecting the trend towards longer durations. We also assume that when distributing AI data center power for distributed training and chips companies will only be able to muster about 10% to 40% of the existing supply.4

Power constraints. Plans for data center campuses of 1 to 5 GW by 2030 have already been discussed, which would support training runs ranging from 1e28 to 3e29 FLOP (for reference, GPT-4 was likely around 2e25 FLOP). Geographically distributed training could tap into multiple regions’ energy infrastructure to scale further. Given current projections of US data center expansion, a US distributed network could likely accommodate 2 to 45 GW, which assuming sufficient inter-data center bandwidth would support training runs from 2e28 to 2e30 FLOP. Beyond this, an actor willing to pay the costs of new power stations could access significantly more power, if planning 3 to 5 years in advance.

Chip manufacturing capacity. AI chips provide the compute necessary for training large AI models. Currently, expansion is constrained by advanced packaging and high-bandwidth memory production capacity. However, given the scale-ups planned by manufacturers, as well as hardware efficiency improvements, there is likely to be enough capacity for 100M H100-equivalent GPUs to be dedicated to training to power a 9e29 FLOP training run, even after accounting for the fact that GPUs will be split between multiple AI labs, and in part dedicated to serving models. However, this projection carries significant uncertainty, with our estimates ranging from 20 million to 400 million H100 equivalents, corresponding to 1e29 to 5e30 FLOP (5,000 to 300,000 times larger than GPT-4).

Data scarcity. Training large AI models requires correspondingly large datasets. The indexed web contains about 500T words of unique text, and is projected to increase by 50% by 2030. Multimodal learning from image, video and audio data will likely moderately contribute to scaling, plausibly tripling the data available for training. After accounting for uncertainties on data quality, availability, multiple epochs, and multimodal tokenizer efficiency, we estimate the equivalent of 400 trillion to 20 quadrillion tokens available for training by 2030, allowing for 6e28 to 2e32 FLOP training runs. We speculate that synthetic data generation from AI models could increase this substantially.

Latency wall. The latency wall represents a sort of “speed limit” stemming from the minimum time required for forward and backward passes. As models scale, they require more sequential operations to train. Increasing the number of training tokens processed in parallel (the ‘batch size’) can amortize these latencies, but this approach has a limit. Beyond a ‘critical batch size’, further increases in batch size yield diminishing returns in training efficiency, and training larger models requires processing more batches sequentially. This sets an upper bound on training FLOP within a specific timeframe. We estimate that cumulative latency on modern GPU setups would cap training runs at 3e30 to 1e32 FLOP. Surpassing this scale would require alternative network topologies, reduced communication latencies, or more aggressive batch size scaling than currently feasible.

Bottom line. While there is substantial uncertainty about the precise scales of training that are technically feasible, our analysis suggests that training runs of around 2e29 FLOP are likely possible by 2030. This represents a significant increase in scale over current models, similar to the size difference between GPT-2 and GPT-4. The constraint likely to bind first is power, followed by the capacity to manufacture enough chips. Scaling beyond would require vastly expanded energy infrastructure and the construction of new power plants, high-bandwidth networking to connect geographically distributed data centers, and a significant expansion in chip production capacity.

What constrains AI scaling this decade

Power constraints

In this analysis, we project the power requirements necessary to sustain the current trajectory of scaling AI training. We then explore potential strategies to meet these power demands, including on-site power generation, local grid supply, and geographically distributed training networks. Our focus is on AI training runs conducted within the United States, examining the feasibility and constraints of each approach.5

Data center campuses between 1 to 5 gigawatt (GW) are likely possible by 2030. This range spans from Amazon’s 960 MW nuclear power contract in Pennsylvania to the announced 5 GW OpenAI/Microsoft campus. Such campuses would support AI training runs ranging from 1e28 to 3e29 FLOP, given expected advancements in the energy efficiency of ML GPUs.

Scaling beyond single-campus data centers would involve geographically distributed training, which could utilize energy infrastructure across multiple regions. Given current projections, a distributed training network could accommodate a demand of 2 to 45 GW, allowing for training runs of 2e28 to 2e30 FLOP. Bandwidth could also constrain the largest training run that could be done in such a network. Concretely, inter-data center bandwidths of 4 to 20 Petabits per second (Ppbs), which are on trend for existing data centers, would support training runs of 3e29 to 2e31 FLOP. This is likely high enough that bandwidth would not be a major obstacle compared to securing the power supply.6

Larger training runs are plausible: we expect the cost of the infrastructure needed to power GPUs during a training run to be around 40% of the cost of the GPUs themselves by 2030, and rapid expansion of the power supply via natural gas or solar power could be arranged within three to five years of a decision to expand—though this could be constrained by infrastructure-level bottlenecks.

The current trend of AI power demand

AI model training currently consumes a small but rapidly growing portion of total data center power usage. Here, we survey existing estimates of current demand, extrapolates future trends, and compares these projections to overall data center and national power capacity.

Large-scale AI training relies primarily on hardware accelerators, specifically GPUs. The current state-of-the-art GPU is Nvidia’s H100,7 which has a thermal design power (TDP) of 700W. After accounting for supporting hardware such as cluster interconnect and CPUs, and data center-level overhead such as cooling and power distribution, its peak power demand goes up to 1,700W per GPU.8

Using the power demand per GPU, we can estimate the installed power demand for frontier models. The recent Llama 3.1 405B model, with its 4e25 FLOP training run, used a cluster of 16,000 H100 GPUs. This configuration required 27MW of total installed capacity (16,000 GPUs × 1,700W per GPU). While substantial—equivalent to the average yearly consumption of 23,000 US households9—this demand is still small compared to large data centers, which can require hundreds of megawatts.How much will this increase by the end of the decade? Frontier training runs by 2030 are projected to be 5,000x larger than Llama 3.1 405B, reaching 2e29 FLOP.10 However, we don’t expect power demand to scale by as much. This is for several reasons.

First, we expect hardware to become more power-efficient over time. The peak FLOP/s per W achieved by GPUs used for ML training have increased by around 1.28x/year between 2010 and 2024.11 If continued, we would see 4x more efficient training runs by the end of the decade.

Second, we anticipate more efficient hardware usage in future AI training. While Llama 3.1 405B used FP16 format (16-bit precision), there’s growing adoption of FP8 training, as seen with Inflection-2. An Anthropic co-founder has suggested FP8 will become standard practice in frontier labs. We expect that training runs will switch over to 8-bit by 2030, which will be ~2x as power-efficient (e.g. The H100 performs around 2e15 FLOP/s at 8-bit precision, compared to 1e15 FLOP/s at 16-bit precision).12

Third, we expect training runs to be longer. Since 2010, the length of training runs has increased by 20% per year among notable models, which would be on trend to 3x larger training runs by 2030. Larger training run durations would spread out energy needs over time. For context, Llama 3.1 405B was trained over 72 days, while other contemporary models such as GPT-4 are speculated to have been trained over ~100 days. However, we think it’s unlikely that training runs will exceed a year, as labs will wish to adopt better algorithms and training techniques on the order of the timescale at which these provide substantial performance gains.

Given all of the above, we expect training runs in 2030 will be 4x (hardware efficiency) * 2x (FP8) * 3x (increased duration) = 24x more power-efficient than the Llama 3.1 405B training run. Therefore, on-trend 2e29 FLOP training runs in 2030 will require 5,000x (increased scale) / 24x ≈ 200x more power than was used for training of Llama 3.1 405B, for a power demand of 6 GW.

These figures are still relatively small compared to the total installed power capacity of the US, which is around 1,200 GW, or the 477 GW of power that the US produced on average in 2023.13 However, they are substantial compared to the power consumption of all US data centers today, which is around 20 GW,14 most of which is currently not AI related. Furthermore, facilities that consume multiple gigawatts of power are unprecedentedly massive—energy-intensive facilities today such as aluminum smelters demand up to around the order of a gigawatt of power, but not much more.15,16 In the following sections, we investigate whether such energy-intensive facilities will be possible

Power constraints for geographically localized training runs

For geographically localized training, whether done by a single data center or multiple data centers in a single campus, there are two options for supplying power: on-site generation, or drawing from (possibly multiple) power stations through the local electricity grid.

Companies today are already pursuing on-site generation. Meta bought the rights to the power output of a 350MW solar farm in Missouri and a 300MW solar farm in Arizona.17 Amazon owns a data center campus in Pennsylvania with a contract for up to 960 megawatts from the adjoining 2.5 GW nuclear plant. The primary motivation behind these deals is to save on grid connection costs and guarantee a reliable energy supply. In the coming years such data centers might allow unprecedentedly large training runs to take place—960 MW would be over 35x more power than the 27 MW required for today’s frontier training runs.

Could one acquire even more power through on-site generation? Currently, there are at least 27 power plants with capacity greater than 2.5 GW in the US,18 ranging in size up to the Grand Coulee 6.8GW hydroelectric plant in Washington. However, a significant portion of the power capacity from existing plants is likely already committed through long-term contracts.19 This limited availability of spare capacity suggests that existing US power plants may face challenges in accommodating large-scale on-site generation deals. The scarcity of spare energy capacity also breeds disputes. For example, Amazon’s bid for 960 MW of on-site nuclear power is challenged by two utilities seeking to cap Amazon at its current 300 MW purchase. They argue this arrangement evades shared grid costs; such disputes may also inhibit other on-site power deals.

More large-scale plants might be constructed in the coming years, but few have been built recently, and the most recent >3 GW power stations took around five years to build.20 It seems unlikely that any already-planned US power stations will be able to accommodate an on-site data center in the >3 GW range by 2030.21 Instead, moving to larger scales will likely require drawing electricity from the grid.

As a proxy, we can look at data center consumption trends in geographically localized areas. For instance, Northern Virginia is the largest data center hub in the US, housing nearly 300 data centers that are connected to 5 GW of power in peak capacity.22 The largest Northern Virginia electricity provider, Dominion, expects their data center load to increase 4x in the next fifteen years, for an implied 10% yearly growth rate. If Dominion and other regional providers stick to similar expansion plans, by 2030 we might expect data center power capacity in Northern Virginia to grow to around 10 GW.23

Some companies are investigating options for gigawatt-scale data centers, a scale that seems feasible by 2030. This assessment is supported by industry leaders and corroborated by recent media reports. The CEO of NextEra, the largest utility company in the United States, recently stated that while finding a site for a 5-gigawatt AI data center would be challenging, locations capable of supporting 1-gigawatt facilities do exist within the country. It is also consistent with a media report indicating that Microsoft and OpenAI are tentatively planning an AI data center campus for 2028 dubbed Stargate that will require “several gigawatts of power”, with an expansion of up to 5 GW by 2030.24

In sum, current trajectories suggest that AI training facilities capable of accommodating 2 to 5 GW of power demand are feasible by 2030. This assessment is based on three key factors: the projected growth of data center power capacity, exemplified by Northern Virginia’s expected increase from 5 GW to 10 GW; ambitious industry plans for gigawatt-scale data centers, such as the rumored Stargate campus; and utility company assessments indicating that 1 to 5-gigawatt facilities are viable in select US locations. For context, a 5 GW power supply such as the rumored Stargate campus would allow for training runs of 2e29 FLOP by 2030, accounting for expected advances in energy efficiency, and an increase in training duration to over 300 days.25 Training networks powered by co-located power plants or local electricity networks are unlikely to exceed 10 GW—as this would come close to the total projected power demand across all data centers in Northern Virginia.

Power constraints for geographically distributed training

Distributing AI training beyond a single data center can help circumvent local power constraints. Inter-data center distributed training involves spreading workloads across multiple data centers, which may or may not be in close proximity. This method has likely been used for large models like Gemini Ultra, allowing access to more hardware resources.26 Geographically distributed training extends this concept across wider areas, potentially tapping into separate electricity grids. Major tech companies are well-positioned for this approach, with data centers already spread across multiple regions. For example, Google operates data centers in 15 different U.S. states.27 This approach could enable larger-scale training operations by accessing a broader pool of power resources.

How much power could distributed data center networks access? As with local data center networks, we ground our discussion in historical trends, supplier forecasts and third-party projections of data center power growth. In a later section we discuss factors that would affect the feasibility of a major expansion in the US’s overall power supply, which could unlock even more power for data centers.

The potential for US data centers to access electricity is substantial and growing. To accurately assess this capacity, it’s crucial to distinguish between two key metrics: the average rate of actual energy consumption, which accounts for downtime and fluctuations, and the total peak capacity for which data centers are rated. We estimate that average power consumption across US data centers is over 20 GW today.28 Dominion has said that the data centers they serve demand 60% of their capacity on average, and estimates from experts we spoke to suggest that data centers consume around 40-50% of their rated capacity, on average. This suggests an overall capacity of 33 to 50 GW, or ~40 GW as a central estimate.29 In addition, according to SemiAnalysis’ data center industry model, total data center IT capacity in North America (the vast majority of which is in the US) was ~36 GW at the end of 2023 and will be ~48 GW at the end of 2024, which is consistent with this estimate.30

Figure 2: Reported and planned total installed IT capacity of North America data centers via SemiAnalysis’ data center industry model. Important note: to find total capacity, we must multiply these figures by PUE, which ranges from 1.2x for AI datacenters to 1.5x for other datacenters.

The potential for rapid expansion in data center power capacity is significant, as evidenced by multiple sources and projections. Historical data from SemiAnalysis indicates tracked data center capacity grew at an annual rate of ~20% between 2019 and 2023, per (see figure 2). Planned expansions in 2024 and 2025 aim to accelerate this, achieving 32% yearly growth if completed on time.

We can also look at growth projections from utilities companies to estimate a feasible growth rate for the overall data center industry. In Northern Virginia, Dominion is planning for a 10-15% annual growth rate31 in data center power in the coming years, following 24% annual demand growth from 2017 to 2023. NOVEC, another Virginia utility, expects 17% yearly growth in the coming years.

Finally, other independent estimates are consistent with a ~15% annual growth rate, such as from Goldman Sachs, which projects that data center power consumption will grow at an annual rate of 15% to 400 TWh in 2030 (for an average demand of ~46 GW), and from the Electric Power Research Institute (EPRI), which considers a 15% growth rate if there is a rapid expansion of AI applications.

Overall, an annual growth rate of 10-30% seems achievable. A central estimate of 15% growth would imply that US data center capacity could grow from 40 GW to up to 90 GW by 2030, or an increase of 50 GW. Note again that we are using a range of projections of actual growth to ground estimates of feasible growth, so this figure is arguably conservative.

Given power capacity for all data centers, how much power would be available for AI? Currently, the majority of US data centers are dedicated to non-AI uses such as internet services and cloud computing. For instance, SemiAnalysis tracks 3 GW of installed capacity across AI data centers in North America by the end of 2023. This corresponds to ~8% of total data center capacity.32 However, the share of power demand from AI data centers is on the rise, and we expect the AI power capacity share to become even larger in the coming years.

Existing forecasts of the annual growth in power demand for non-AI data centers center around 8% to 11%.33 At a 8% growth rate, demand for non-AI applications would increase from around 37 GW today to around 60 GW by 2030, leaving 90 GW - 60 GW ≈ 30 GW capacity for AI data centers. This would result in roughly a 30 GW / 3 GW ≈ 10x expansion in AI installed capacity, or roughly 47% annual growth on AI installed power capacity.34 This projection assumes a fixed allocation of growth to non-AI applications. However, if AI applications prove more profitable or strategically important, cloud providers could potentially reallocate resources, leading to even higher growth in AI installed power capacity at the expense of non-AI expansion.

Finally, we estimate how much of this capacity can be dedicated to a single training run. We must account for the fact that this added power capacity will likely be shared between different actors, such as Microsoft, Google, Amazon and so on. Our best guess is that the company with the largest share might get around 33% of the power capacity for AI data centers. Companies can front-load their capacity for training, leading to most of their capacity at the time of starting a large training run to be dedicated to training, perhaps by as much as 80%. In total, this means that 33% x 80% = 26% of the AI data center capacity might be used in a single training run.35

Given our estimates, the most well-resourced AI company in the US will likely be able to orchestrate a 30 GW x 26% ≈ 8 GW distributed training run by 2030. After accounting for uncertainties on the relevant growth rates and current capacities, we end up with a conservative estimate of 2 to 45 GW for the largest supply that a developer will be able to muster for distributed training, which would allow for training runs between 2e28 to 2e30 FLOP (see figure 3 in a later section). For context, our earlier analysis suggested that single-campus facilities might achieve 2 to 5 GW capacity by 2030. The upper end of our distributed training estimate (45 GW) significantly exceeds this single-campus projection, indicating the potential for distributed training to overcome power bottlenecks.

Feasibility of geographically distributed training

Geographically distributed training runs, which spread workloads across multiple data centers to alleviate power constraints, are likely to be technically feasible at the scale projected in our analysis. This approach builds upon existing practices in AI model training, where computations are already massively parallelized across numerous GPUs. The fundamental structure of AI training facilitates geographical distribution: datasets are divided into batches, with model weight updates occurring only once per batch. In a distributed setup, these batches can be split across various locations, requiring data centers to synchronize and share gradient updates only at the conclusion of each batch.

Evidence for the viability of this approach exists in current practices. For instance, Google’s Gemini Ultra model was reportedly trained across multiple data centers, demonstrating the practicality of geographically dispersed training.36 While the exact geographic spread of the data centers used for Gemini Ultra is unclear, its training provides a concrete example of large-scale distributed operations.

The feasibility of widely dispersed data centers in distributed training is constrained by latency. In a scenario where major U.S. data centers are connected by an 11,000 km fiber optic loop (a high-end estimate), the communication latency would be approximately 55ms.37 Synchronization would require two round trips down the network, taking 110ms. This is using a travel speed that is two-thirds the speed of light, so this latency cannot be reduced as long as we are doing fiber optic communication. So if a training run is completed within 300 days, it could involve at most 300 days / 110ms = 240 million gradient updates.

We are uncertain how large batches can be without compromising training effectiveness. We will assume it to be 60 million tokens—which is speculated to match the largest batch size achieved by GPT-4 during training. This would allow for ~1e16 tokens (240M batches x 60M tokens/batch) to be seen during training, which under Chinchilla-optimal scaling would allow for a ~6e31 FLOP training run.38 In other words, latency is not likely to be the binding constraint, even when pessimistically assuming a data center network involving very distant data centers.

Beyond latency, bandwidth also influences the feasibility of large-scale distributed training. Current data center switch technology, exemplified by the Marvell Teralynx 10, provides insight into achievable bandwidth. This data center switch supports 128 ports of 400 Gps each, for a total bandwidth of 51.2 Tbps.39 Transmitting the gradient updates for a 16T parameter model at 8-bit precision using a standard two-stage ring all-reduce operation would then take 2 x 16T x 8 bit / 51.2 Tbps = 4.9 seconds per trip. Adding 110ms of latency per all-reduce as before, the total time per all-reduce would be 5 seconds in total. Given Chinchilla scaling, this model size would maximize the scale of training that can be accomplished in under 300 days of training, leading to a 3e28 FLOP training run.40

However, achievable bandwidths are likely to be much higher than what can be managed by a single Teralynx 10 ethernet switch. First, links between data centers pairs can be managed by multiple switches and corresponding fibers, achieving much larger bandwidths. For instance, each node in Google’s Stargate network featured 32 switches managing external traffic. In a ring all-reduce setup, a 32-switch data center could dedicate 16 switches to manage the connection with each of its two neighbors. Given the precedent of Google’s B4 network, we think that switch arrangements of 8 to 32 switches per data center pair should be achievable.41

Second, better switches and transceivers will likely exist in the future, increasing the achievable bandwidth. The broader trend of ASIC switches suggests a 1.4 to 1.6x/year increase in bandwidth,42 which would result in 380 to 850 Tbps ethernet switches by the end of the decade.43

Our final estimate of the achievable inter data center bandwidth by 2030 is 4 to 20 Pbps, which would allow for training runs of 3e29 to 2e31 FLOP. In light of this, bandwidth is unlikely to be a major constraint for a distributed training run compared to achieving the necessary power supply in the first place.

Expanding bandwidth capacity for distributed training networks presents a relatively straightforward engineering challenge, achievable through the deployment of additional fiber pairs between data centers. In the context of AI training runs potentially costing hundreds of billions of dollars, the financial investment required for such bandwidth expansion appears comparatively modest.44

Modeling energy bottlenecks

We conclude that training runs in 2030 supported by a local power supply could likely involve 1 to 5 GW and reach 1e28 to 3e29 FLOP by 2030. Meanwhile, geographically distributed training runs could amass a supply of 2 to 45 GW and achieve 4 to 20 Pbps connections between data center pairs, allowing for training runs of 2e28 to 2e30 FLOP.45 All in all, it seems likely that training runs between 2e28 to 2e30 FLOP will be possible by 2030.46 The assumptions behind these estimates can be found in Figure 3 below.

How much further could power supply be scaled?

At this point, it is unclear to us how far power provision for data centers could scale if pursued aggressively. So far, we have grounded our discussion in the existing power supply for data centers as well as growth projections from utilities, and the results of our model reflect these estimates. How could these numbers change if there is an unprecedented investment in growing the power supply?47

Building new power plants seems reasonably affordable and scalable, but there are also important constraints at the grid level (more below). Natural gas and solar plants can be built relatively quickly, in under two years,48 while other types of power, such as nuclear and hydroelectricity, require longer timeframes,49 and there are no full-scale nuclear plants currently in progress in the US.50

How much would it cost to grow the power supply? As a baseline for the cost of expanding power, we can look at the construction and operation costs of a gas power plant.51

A gas power plant is estimated to have an overnight capital cost on the order of $2,500 per kilowatt with 95% carbon capture, or ~$900 without carbon capture, while the operation cost is roughly 4.5 cents per KWh (and around 4 cents more if carbon capture is included52). However, we should note that the estimates with carbon capture are theoretical, as gas with carbon capture has not been deployed at scale. Gas without carbon capture is cheaper but could be more constrained by politics and corporate commitments (more on this below). Meanwhile, data centers pay around $8.7 cents per KWh for electricity. If we assume a 100% premium on top of the operation cost, data centers would pay ~17 cents/KWh for electricity generated by natural gas with carbon capture.

Meanwhile, an H100 GPU requires 1,700W of power and costs ~$30,000, or ~$17,000 per kilowatt. This means that powering a training run that uses H100s with natural gas would require ~$1500-$4000 in capital costs per H100, and ~$2000 in variable costs.53 So if AI developers had to fund the construction of all the power plants required, this would only increase their hardware costs by around ($4000 + $2000) / $30,000 = 20%. However, this becomes worse after accounting for hardware trends, despite improving efficiency. GPUs are becoming more energy efficient in Watt per FLOP/s at a rate of 2x per three years, but they are also becoming more price-performant in FLOP/dollar at a rate of 2x per two years. So in 2030 each dollar spent on GPUs buys 8x more FLOP, requiring 4x less power, for twice as much power per dollar, and the cost of power would be 40% of the cost of GPUs.

Given these figures, the construction of arbitrarily large power supply networks for data centers is affordable, relative to the cost of the required chips, if power can be scaled up at the current marginal price. But at very large scales beyond ~100 GW, it would require unprecedented effort.

Despite AI developers’ potential willingness to invest heavily in solving power bottlenecks, several obstacles may limit the amount of power available for AI training. Transmission lines, essential for connecting power plants to data centers, typically take about 10 years to complete and often face political challenges. The process of connecting new power generation to the grid, known as interconnection, has become increasingly time-consuming, with average queue durations in the US recently reaching five years.54 Additionally, new electrical transformers, crucial for power distribution, can take up to two years to be delivered. These grid-level bottlenecks create uncertainty about the ability to scale up power supply significantly faster than historical growth rates or the most aggressive utility projections, even with substantial financial investments. The long lead times and complex infrastructure requirements make rapid expansion of power capacity challenging, potentially constraining the growth of large-scale AI training operations.

Another potential constraint is political and regulatory constraints blocking or delaying the construction of power plants, as well as supporting infrastructure such as transmission lines and natural gas pipelines. This could limit or slow down a large scale-up of power. This political constraint may be malleable,55 especially if there is much government support for facilitating the power infrastructure to support development of advanced AI systems.

Scaling up AI training power through natural gas presents its own set of challenges. Large yearly expansions of capacity amounting several dozens of gigawatts have precedent in the US. For example, the US installed a net 250 GW of natural gas from 2000 to 2010, averaging 25 GW per year. But this approach would necessitate increased gas drilling and the construction of additional pipelines to transport the gas to power plants. While at least one analysis suggests the US could drill enough gas to power an extra 100 GW for AI training, there are differing opinions on the viability of this approach. For instance, NextEra’s CEO has identified pipeline construction as a potential obstacle, arguing that renewable energy might be a more feasible option for powering AI data centers.

Finally, there is a tension between massive power growth and the US government’s goal of switching to 100% carbon pollution-free energy by 2035, as well as pledges from the three largest cloud compute providers—Google, Microsoft, and Amazon—to become carbon neutral by 2030. This could limit what types of energy sources could be considered56 (though these commitments may not prove to be binding if there is sufficient economic pressure to scale up AI). For instance, fossil fuel plants may need to be equipped with carbon capture to hold to these commitments, and carbon capture has not yet been tested at scale and may not be ready for widespread deployment by 2030. This tension is especially acute for coal. Coal power may be a source of slack in the power supply, since coal plants are running at ~50% capacity, down from ~70% in 2008, despite many being designed to provide reliable “baseload” power.57 However, coal is much more carbon-intensive than gas.

Given these potential bottlenecks, it is unclear to what degree US power supply can be scaled up arbitrarily by 2030 at a cost resembling the current margin. For this reason, we conservatively assume that power supply will not be scaled beyond the levels forecasted by utilities and independent analysts.

Chip manufacturing capacity

AI chips, such as GPUs, provide the compute required to train AI models and are a key input in AI scaling. Growth in GPU clusters has been the main driver of compute growth in the past few years, and higher performance, lower latency, higher memory bandwidth GPUs make it feasible to do ever larger training runs. AI scaling could therefore be constrained by the number of state-of-the-art GPUs that chipmakers can produce.

We model future GPU production and its constraints by analyzing semiconductor industry data, including projected packaging capacity growth, wafer production growth, and capital expenditure on fabrication plants (fabs). Our projections indicate that GPU production through 2030 is expected to expand between 30% to 100% per year, aligning with CoWoS packaging and HBM production growth rates.

In our median projection, we expect enough manufacturing capacity to produce 100 million H100-equivalent GPUs for AI training, sufficient to power a 9e29 FLOP training run. This estimate accounts for GPUs being distributed among multiple AI labs and partially used for model serving. However, this projection has significant uncertainty, primarily due to unknowns in advanced packaging and high-bandwidth memory capacity expansions. Our estimates range from 20 million to 400 million H100 equivalents, potentially enabling training runs between 1e29 and 5e30 FLOP (5,000 to 250,000 times larger than GPT-4).

Current production and projections

Recent years have seen rapid growth in data center GPU sales. Nvidia, which has a dominant market share in AI GPUs, reportedly shipped 3.76 million data center GPUs in 2023, up from 2.64 million units in 2022.58 By the end of 2023, 650,000 Nvidia H100 GPUs were shipped to major tech companies. Projections for 2024 suggest a potential threefold increase in shipments, with expectations of 1.5 to 2 million H100 units shipped. This volume would be sufficient to power a 6e27 FLOP training run.59

However, if we extrapolate the ongoing 4x/year trend in training compute to 2030, we anticipate training runs of around 2e29 FLOP. A training run of this size would require almost 20M H100-equivalent GPUs.60 If we suppose that at most around 20% of total production can be netted by a single AI lab, global manufacturing capacity would need to reach closer to 100M H100-equivalent GPUs by 2030. This far exceeds current production levels and would require a vast expansion of GPU production.61

TSMC, the company that serves as Nvidia’s primary chip fab, faces several challenges in increasing production. One key near-term bottleneck is chip packaging capacity—particularly for TSMC’s Chip-on-wafer-on-Substrate (CoWoS) process, which is Nvidia’s main packaging method for their latest GPUs. This packaging process combines logic units with high-bandwidth memory (HBM) stacks into ready-to-use AI chips. Packaging is difficult to scale up rapidly, as new facilities require complex equipment from many vendors. Constructing these facilities also requires specialized training for personnel. These constraints have limited TSMC’s AI chip output, despite strong demand from customers like Nvidia.

TSMC is directing significant efforts to address this constraint. The company is rapidly increasing its CoWoS packaging capacity from 14,000-15,000 wafers per month in December 2023 to a projected 33,000-35,000 wafers per month by the end of 2024.62 To further expand this capacity, TSMC opened a new fab in 2023, Advanced Backend Fab 6. At full capacity, this fab could process up to 83,000 wafers per month, which would more than double TSMC’s advanced packaging volume.63 TSMC has also announced plans to increase its packaging capacity by 60% annually through 2026. Recent scale-ups of CoWoS capacity have ranged from 30% to 100% annually. If this trend continues, the number of dies of a fixed size that can be produced will likely increase at a similar rate.64,65

The production of HBM chips themselves is another significant constraint on GPU manufacturing. HBM chips are nearly sold out until 2026. While HBM volume is expected to increase 2-3x from 2023 to 2024, much of this growth comes from reallocating capacity from DRAM, which is another class of lower-end memory chips. SK Hynix, the current HBM leader and Nvidia’s main supplier, projects a 60% annual growth in HBM demand in the medium to long-term (likely referring to revenue), while one analyst firm estimates 45% annual growth in production volume from 2023 to 2028.

HBM production and TSMC’s CoWoS packaging capacity, two key constraints in GPU manufacturing, are projected to expand at similar rates in the coming years. Based on TSMC’s announced plans and recent growth trends in CoWoS capacity, which have ranged from 30% to 100% annually, we estimate GPU production will grow at a similar rate in the near term.

Despite the substantial growth in GPU production, wafer production itself is not likely to be the primary limiting factor. Currently, data center GPUs represent only a small portion of TSMC’s total wafer production. Our central estimate of TSMC’s current production capacity for 5nm and 3nm process nodes is 2.2M wafers per year as of early 2024.66 The projected 2 million H100 GPUs for 2024 would only consume about 5% of the 5nm node’s capacity.67 Even with the projected growth rates, it’s unlikely that GPU manufacturing will dominate TSMC’s leading-edge capacity in the immediate future. Instead, the main constraints on expanding GPU production appear to be chip packaging and HBM production.

However, it is plausible that GPU manufacturing could eventually come to dominate TSMC’s leading-edge nodes. There is precedent for such a scenario; in 2023, Apple absorbed around 90% of TSMC’s 3nm production. Given the high profit margins on AI chips, Nvidia could potentially outbid competitors like Apple and Qualcomm for TSMC’s advanced wafer capacity. While we think this is plausible, this scenario is not featured in our mainline analysis.

Modeling GPU production and compute availability

TSMC forecasted AI server demand to grow at 50% annually over the next five years. Given TSMC’s historical 5 percentage point yearly increase in operating margins, which investors expect to continue due to price hikes, we estimate actual GPU volume growth at around 35% per year.68 This is conservative compared to other projections: AMD expects 70% annual growth for data center chips through 2027, implying about 60% annual GPU volume growth assuming similar price increases.69 These more aggressive estimates align closely with near-term CoWoS packaging and HBM production scale-up projections discussed above, lending them credibility. We take this range of estimates into account, and project production of GPU dies to expand somewhere between 30% and 100% per year.

We expect that there will be enough wafer capacity to sustain this expansion. TSMC’s historical trends show capex growth of 15% annually and wafer capacity expansion of 8% yearly from 2014 to 2023.70 TSMC might increase its capex dedicated to expanding GPU production and substantially expand the production of GPU-earmarked-wafers, packaging and other parts of the production. If TSMC accelerates its capex growth to match their expected growth in the AI server market of 50% annual growth, the historical relationship between input and output growth suggests that total wafer capacity could expand by 27% per year.71 Overall, this points to a growth rate of leading-edge wafer production of between 5% and 20% per year.

We have appreciable uncertainty about current leading-edge wafer production, and assume that this is somewhere between 100k to 330k/month. At 5 to 20% yearly growth, we project the total stock of leading-edge wafers produced by 2030 to be between 10M and 37M. Based on TSMC’s and others’ projections, we expect around 20% of these wafers to be dedicated to producing data center GPUs.

These projections indicate that 2e30 to 4e31 FLOP/year worth of global stocks of H100 equivalents will be produced in aggregate. Of course, only some fraction of this will be dedicated to a single training run, since individual labs will only receive some small fraction of shipments, labs will earmark their GPUs to inference and other experiments, and training runs will likely last less than a year. At current rates of improvements in hardware and algorithms and growing budgets for AI, training runs will likely not exceed six months if hardware or software progress does not slow. We assume that training runs will last around 2 to 9 months; on the higher end if progress in hardware and software stalls, and the lower end if progress accelerates relative to today.

It is likely that AI chips will be distributed across many competing labs, with some lab owning some non-trivial fraction of global compute stocks. For instance, Meta reportedly bought one fourth of H100 shipments to major companies in 2023. We estimate that recently, the share of datacenter GPUs owned by a single lab at any point in time might be somewhere between 10% and 40%.

Of this allocation, some fraction will likely be tied up with serving models and unavailable for training. It is difficult to know what fraction this might be. However, we can use a simple heuristic argument. A simple analysis suggests AI labs should allocate comparable resources to both tasks. If this holds, and compute for training continues to grow 4x per year, then we should expect about 80% of the total available compute to be used to train new models.72

Putting all this together leads us to the following picture. On the median trajectory, about 100M H100-equivalents could, in principle, be dedicated to training to power an 9e29 FLOP training run. However, this projection carries significant uncertainty, with our estimates ranging from 20 million to 400 million H100-equivalents, corresponding to 1e29 to 5e30 FLOP. To establish an upper bound, we considered a hypothetical scenario where TSMC’s entire capacity for 5nm and below is devoted to GPU production, from now until 2030. In this case, the potential compute could increase by an order of magnitude, reaching 1e30 to 2e31 FLOP. This upper limit, based on current wafer production projections, illustrates the maximum possible impact on AI training capabilities if existing constraints in packaging, HBM production, and wafer allocation were fully resolved. Figure 4 below illustrates these estimates, and lists the assumptions behind them.

Data scarcity

Scaling up AI training runs requires access to increasingly large datasets. So far, AI labs have relied on web text data to fuel training runs. Since the amount of web data generated year to year grows more slowly than the data used in training, this will not be enough to support indefinite growth. In this section, we summarize our previous work on data scarcity, and expand it by estimating further possible gains in scale enabled by multimodal and synthetic data.

The largest training datasets known to have been used in training are on the order of 15 trillion tokens of publicly available text and code data.73 We estimate that the indexed web contains around 500 trillion tokens after deduplication, 30 times more data than the largest known training datasets. This could be as low as 100T if only looking at already compiled corpora like CommonCrawl, or as high as 3000T if also accounting for private data.74

Since the Chinchilla scaling laws suggest that one ought to scale up dataset size and model size proportionally, scaling up training data by a factor of 30x by using the entirety of the indexed web would enable AI labs to train models with 30x more data and 30x more parameters, resulting in 900x as much compute, i.e. up to 8e28 FLOP if models are trained to be Chinchilla-optimal.75,76,77

If the recent trend of 4x/year compute scaling continues, we would run into this “data wall” for text data in about five years. However, data from other modalities and synthetic data generation might help mitigate this constraint. We will argue that multimodal data will result in effective data stocks of 450 trillion to 23 quadrillion tokens, allowing for training runs of 6e28 to 2e32 FLOP. Furthermore, synthetic data might enable scaling much beyond this if AI labs spend a significant fraction of their compute budgets on data generation.78

Copyright restrictions

Published text data may be subject to copyright restrictions that prohibit its use in training large language models without permission. While this could theoretically limit the supply of training data, several factors mitigate this concern in practice. The primary consideration is the ongoing legal debate surrounding whether the inclusion of published text in a general-purpose model’s training data constitutes “fair use”. However, even if this debate is settled in favor of copyright holders, there are further practical considerations that complicate the enforcement of such restrictions.

Many large repositories of public web data, such as Blogspot, allow individual authors to retain copyright of their content. These individuals, however, may face significant challenges in proving the inclusion of their content in training data and may lack the capacity or desire to engage in complex litigation processes. This practical barrier can prevent individual content creators from pursuing legal action against AI companies using their data.

On the other hand, larger publishers like newspapers typically have the resources to litigate for copyright infringement. However, these entities can also negotiate agreements with AI companies to license their data. For instance, OpenAI has successfully reached agreements with several major publishers, including StackOverflow, The Atlantic, TIME, and Vox Media. These agreements demonstrate that AI companies can often navigate copyright restrictions effectively through negotiation and collaboration with content providers.

Ultimately, the full extent to which copyright restrictions will limit the supply of training data for large language models remains uncertain. While legal and practical challenges exist, it seems unlikely that these constraints will significantly reduce the overall volume of available data. The vast amount of content on the internet, combined with the complexities of enforcement and the potential for licensing agreements, suggests that AI companies will still have access to substantial datasets. However, it’s worth noting that copyright restrictions may disproportionately affect high-quality sources such as books and reputable news outlets. These sources often contain well-curated, factual, and professionally edited content, which could be particularly valuable for training. As a result, while the quantity of training data may not be drastically reduced, there could be a notable impact on the quality and diversity of the most authoritative sources available for AI training.

Multimodality

AI labs could leverage other data modalities such as image or video.79 Current multimodal foundation models are trained on datasets where 10-40% is image data.80 This data is used to allow models to understand and generate images. Given the usefulness of multimodal understanding, we expect future datasets to include a significant portion of non-text data purely for this purpose. That said, to significantly expand the stock of data, the portion of multimodal data would have to become far greater than that of text data.

Audio, image or video modeling will be valuable enough on its own that AI labs will scale pure audiovisual training. Strong visual abilities could enable models to act as assistants embedded within workflows to organize information or operate a web browser. Models that have fluent, fast, multilingual speech abilities are likely to enable much improved personal voice assistant technology, realtime translation, customer service and more fluid interactions compared to text-only. While current vision models use much less compute than language models,81 in a scenario where text data is a bottleneck but image data is plentiful, AI labs might start dedicating more resources to image models.

Additional modalities like protein sequences or medical data are also valuable. However, the stock of such data is unlikely to be large enough to significantly expand the stock of available training data.82

Multimodal data can further aid language understanding in various ways. Textual data can be transcribed from audio, image and video data, which could further expand the stock of text-related data.83 More speculatively, non-text data may improve language capabilities through transfer learning or synergy between modalities. For example, it has been shown that combining speech and text data can lead to improved performance compared to single-modality models, and it is suggested that such synergies improve with scale. However, research on transfer learning between modalities is scarce, so we can’t conclude with certainty that transfer learning from multimodal data will be useful.

How much visual data would be available for training if one of these scenarios came to pass? The internet has around 10 trillion seconds of video, while the number of images may also be close to 10T.84 It’s challenging to establish a rate of equivalence between these modalities and text data. Current multimodal models such as Chameleon-34B encode images as 1024 tokens, but we expect that as multimodal tokenizers and models become more efficient this will decrease over time. There are examples of efficient encoding of images with as few as 32 tokens, which after being adjusted by typical text dictionary size would result in 22 tokens per image.85 We take 22 tokens per image and second of video as a central guess, which means that image and video multimodality would increase the effective stock of data available for training by roughly 400T tokens.86 This suggests that image and video content might each contribute as much as text to enable scaling. This would allow for training runs ten times larger than if trained purely on text data.

Moreover, there are probably on the order of 500B-1T seconds of publicly available audio on the internet.87 Neural encoders can store audio at <1.5 kbps while being competitive with standard codecs at much higher bitrate. This corresponds to <100 language-equivalent tokens per second of audio. So it seems likely that total stored audio is on the order of 50-100T trillion tokens, not far from text and image estimates.88 Hence, this would probably not extend the stock of data by a large factor.

After adding the estimates from all modalities and accounting for uncertainties in the total stock of data, data quality, number of epochs, and tokenizer efficiency, we end up with an estimate of 400 trillion to 20 quadrillion effective tokens available for training, which would allow for training runs of 6e28 to 2e32 FLOP by 2030 (see Figure 5).

Given how wide this interval is, it may be useful to walk through how the high end of the range could be possible. Note that these numbers are merely illustrative, as our actual confidence interval comes from a Monte Carlo simulation based on ranges of values over these parameters.

A high-end estimate of the amount of text data on the indexed web is two quadrillion tokens (Villalobos et al, 2024). Meanwhile, a high-end estimate of the number of images and seconds of video on the internet is 40 trillion. If we also use a higher-end estimate of 100 tokens per image or video-second, this would mean four quadrillion visual tokens, or six quadrillion text and visual tokens. If we also assume that this stock of data doubles by 2030, 80% is removed due to quality-filtering (FineWeb discarded ~85% of tokens), and models are trained on this data for 10 epochs, this would lead to an effective dataset size of ~20 quadrillion tokens. See Figure 5 for a full list of these parameters and our reasoning for the value ranges we chose.

Synthetic data

In our projections we have only considered human-generated data. Could synthetic data generation be used to greatly expand the data supply? Several important milestones in machine learning have been achieved without relying on human data. AlphaZero and AlphaProof learned to play games and solve geometry problems respectively, matching or surpassing human experts by training purely on self-generated data. Language models fine-tuned on synthetic data can improve their ability to code and answer reasoning questions. Small LLMs trained on carefully curated synthetic data can achieve comparable or superior performance with significantly fewer parameters and less training data compared to larger models trained on web-scrape text. Large-scale frontier language models like Llama 3.1 use synthetic data to augment capabilities in areas where collecting high-quality human-annotated data might be challenging or costly, such as long-context capabilities, multilingual performance, and tool use capabilities.

One key reason to expect it should be possible to spend compute to generate high-quality synthetic data is that it’s often easier to verify the quality of an output than it is to generate it. This principle most clearly applies in domains where we can create explicit correctness or quality signals. For example, in coding tasks, we can check if generated code passes unit tests or produces correct outputs for sample inputs. In mathematics, we can detect logical or arithmetic mistakes and correct them.

This process enables developers to use compute to generate numerous candidate solutions. They can then systematically verify the correctness or quality of each generated solution, keeping only the high-quality examples while discarding the subpar ones. This approach can computationally create datasets filled with high-quality, synthetic examples. For these tasks, one can expend more inference compute to generate outputs of higher quality.

The verification-easier-than-generation principle may extend beyond coding to various other domains. For instance, it’s often easier to review a research paper for quality and novelty than to write an original paper. Similarly, evaluating the coherence and plausibility of a story is typically less challenging than writing an engaging story from scratch. In these cases, while traditional symbolic systems might struggle with verification, modern AI systems, particularly large language models, have demonstrated evaluation capabilities comparable with human verifiers. This suggests that AI-driven verification could enable creating high-quality synthetic data in these complex domains.

There are additional mechanisms which can be used to generate high-quality synthetic data. For example, it is often the case that a model cannot produce high-quality outputs directly, but it can produce them by combining several smaller steps. This is the key idea behind chain-of-thought prompting, which can be used to teach models increasingly complex arithmetic by bootstrapping from simpler examples.

Generating endless data

Even if it is technically possible to generate useful synthetic data for a wide range of tasks, the computational overhead of generation might preclude its usage in practice. We can try to estimate how much additional compute would be needed to scale models using synthetic data, compared to a baseline of scaling natural datasets.

Suppose that we have access to a frontier model which we will use as a data generator, and want to train a target model with 10x more compute than the generator. We want the new model to achieve a similar quality than would be obtained by training it on natural data. In previous work, we quantified the extent to which spending more compute at inference time improves the quality of outputs that models can achieve. Concretely, chain-of-thought was found to lead to a compute-equivalent-gain of 10x while increasing inference costs by 10x.

This means that increasing the compute usage of the generator during inference by 10x (by generating outputs step by step) raises the quality of the outputs to the level of a model trained on 10x more compute. We can then train our new model on these high-quality outputs to reach the desired level of performance.

Assuming the new model is trained in a compute-optimal fashion, the computational cost of generating the new training dataset would be similar to the cost of training the new model.89 So using synthetic data would double the compute requirements to train a model, compared to using natural data.

Spending so much compute on synthetic data generation for training would not be unprecedented: DeepMind spent roughly 100x more compute on game simulations to generate data for AlphaGo Zero than in training the underlying model. However, this is very speculative; for example we haven’t yet seen such a technique applied successfully at scale to frontier model pretraining.

There are several obstacles for using synthetic data. The first is the possibility of model collapse: over-reliance on synthetic data might cause a degeneration or stagnation of capabilities. It’s unclear if the self-correction mechanisms we have introduced are enough to avoid this outcome, although there are promising signs.

Increasing compute allocation for data generation can enhance synthetic training data quality through two types of approaches: generating many candidates then filtering for quality, and employing compute-intensive methods like chain-of-thought reasoning to produce superior outputs directly. However, this strategy may face diminishing returns as compute investment grows. When verification or quality estimation processes are imperfect, improvements in data quality may plateau despite additional compute allocation.90

Synthetic data is already useful for domains where verification is straightforward such as math and coding or in a small set of domains where collecting high-quality human-annotated data might be challenging or costly, such as tool use, long-context data or preference data. Based on this success, and the intuitions we’ve discussed, we find it plausible that high-quality synthetic data generation is possible in a wide range of fields, beyond what has been demonstrated until now, but this is still uncertain. In this case, data availability might not pose a constraint on scaling, as more could be generated on demand by spending enough compute.

We expect synthetic data to likely be useful for overcoming data bottlenecks. However, the research on the topic is nascent and the state of existing evidence is mixed, and so in this article we conservatively rely on estimates from multimodal data, excluding all types of synthetic data.

Latency wall

Another potential constraint to AI scaling is latency. There is a minimum time required for a model to process a single datapoint, and this latency grows with the size of the model. Training data is divided into batches, where the data in a batch can be processed in parallel, but there are limits to how large these batches can be. So a training run must last at least as long as the time to process one batch, multiplied by the number of training batches (training dataset size divided by the batch size). Given a finite training run duration, this dynamic limits the size of a model and how much data it can be trained on, and thus the total size of the training run.91

This constraint does not pose much of an issue for today’s training runs because typical latencies are very small. However, it could become substantially more important in larger training runs as the minimum latency increases with model size due to the sequential nature of operations across layers.

Training runs can partially manage this latency issue by increasing the batch size, allowing more data to be processed in parallel. In particular, increasing the batch size improves the convergence of stochastic gradient descent, at the cost of more computational resources. This enables one to speed up training at the cost of more compute per batch size, though without substantially increasing the overall compute needed for training. However, beyond a “critical batch size”, further batch increases yield drastically diminished returns per batch. It is therefore not efficient to scale batches indefinitely, and training a model on ever-larger datasets requires increasing the number of batches that need to be processed in sequence.

To get a quantitative sense of the size of this bottleneck, we investigate the relevant sources of latency in the context of training large transformer models. Given batch sizes of 60 million tokens (speculated to be the batch size of GPT-4), we arrive at training runs between 2e30 to 2e32 FLOP, which would incur at least 270 to 400 microseconds (µs) of NVLINK and Infiniband communication latency per layer.

However, this may be an underestimate because we expect that the critical batch size likely scales with model size. Under a speculative assumption that batch size can be scaled as roughly the cube root of model size, we estimate the feasibility of training runs around 3e30 to 1e32 FLOP, which would incur at least 290 to 440 µs of latency with modern hardware.92

Latency wall given intranode latencies

We first focus our analysis on intranode latencies, meaning latencies associated with a single node (i.e. a server) hosting multiple GPUs. In this case there are two types of latency that are most pertinent: the kernel latency captures how long a single matrix multiplication or “matmul” takes, and the communication latency measures how long it takes to propagate results between the GPUs.

We anchor our estimates of these two latencies to commonly used machine learning hardware. Experiments by Erdil and Schneider-Joseph (forthcoming) indicate a kernel latency on the order of 4.5 µs for an A100 GPU. Meanwhile, the communication latency in an 8-GPU NVLINK pod for an all-reduce is on the order of 9.2 µs.93 The total base latency per matmul in an NVLINK pod is then in the order of 13.7 µs.

The latency of each transformer layer follows straightforwardly from this. In particular, each layer of a standard decoder-only transformer model involves four consecutive matrix multiplications,94 and we must pass each layer twice (for the forward and backward passes). Therefore, the minimal latency per layer and batch is eight times that of a single matrix multiplication, i.e. \( 8 \times 13.7\,\mu\text{s} = 110\,\mu\text{s} \).

To finish estimating the largest training run allowed by the latency wall, we need to make some assumption on scaling the number of layers and the amount of training data. As a heuristic, let’s assume that the number of layers in a model is roughly the cube root of the number of parameters,95 and that the training dataset size will scale proportionally with the number of parameters, following the Chinchilla rule. In particular, assuming a 120 µs minimum latency per layer and a batch size of 60M tokens, we would find that the largest model that can be trained in nine months is 700T parameters, which allows for Chinchilla-optimal models of up to 6e31 FLOP. Note that this estimate might prove to be too optimistic if the latencies per all-reduce from the NVIDIA Collective Communications Library (NCCL) prove to be slower than reported for intermediate size messages.96,97,98

Latency wall given latencies between nodes

So far we have only accounted for intranode (within-node) latencies. This makes sense to some extent; tensor parallelism is often entirely conducted within 8-GPU NVLINK pods precisely to avoid communicating between nodes for each sequential matrix multiplication. However, continued scaling would require internode communication, increasing the latency.

In particular, using a standard InfiniBand tree topology, the latency between nodes scales logarithmically with the number of nodes communicating. Using the NVIDIA Collective Communications Library (NCCL), an all-reduce operation will take at least \( t = 7.4\,\mu\text{s} + 2 \times \left(N \times 0.6\,\mu\text{s} + \log_2(M) \times 5\,\mu\text{s}\right) \) , where \( N \) is the number of GPUs within a pod participating, and \( M \) is the number of pods participating (this includes both the communication and kernel latencies).99

For a training run using 2D tensor parallelism, the number of pods corresponds be the number of GPUs coordinating for a 2D tensor parallel calculation. In particular, a cluster performing TP-way 2D tensor parallel training requires a synchronization of TP GPUs, for which we will have 2.75 GPUs on average communicating within each 8-GPU pod, and \( \sqrt {\text{TP}} / 2.75 \) pods total.

For instance, a 300M H100 cluster using 2,000-way 2D tensor parallelism would involve then \( \sqrt {2000} / 2.75 = 16 \) pods per all-reduce, incurring a 7.4 µs + 2 x (2.75 x 0.6 µs + log2(16) x 5 µs) = 50 µs latency, which corresponds as before to a 8 x 50 µs = 400 µs latency per layer and batch. This is the cluster size that would allow training the largest possible model in under nine months and with 60M batch size, reaching 7e30 FLOP given projections of hardware efficiency.100

How can these latencies be reduced?

Communication latency might be significantly reduced with improvements to the cluster topology. For instance, a mesh topology could bypass the logarithmic scaling of the internode latency, at the cost of a more complex networking setup within data centers (since you would need to have direct connections between all nodes).

Another solution might involve larger servers with more GPUs per pod, to reduce the internode latency, or more efficient communication protocols—for instance, for training Llama 3.1 Meta created a fork of the NVIDIA Collective Communications Library (NCCL) called NCCLX that is optimized for high latency setups, which they claim can shave off tens of microseconds during communications.

Alternatively, we might look into ways to increase the batch size or reduce the number of layers. Previous research by OpenAI relates the critical batch size – after which you see large diminishing returns to training – to how dispersed are gradients with respect to one’s training data. Based on this, Erdil and Schneider-Joseph (forthcoming) conjecture that the batch size might be scaled with the inverse of the reducible model loss, which per Chinchilla scales as roughly the cube root of the number of model parameters.101 If this holds, it would push back the latency wall by an order of magnitude of scaling, see figure 7 below.

Little work has been done on how the number of layers ought to be scaled and whether it could be reduced. Some experimental work indicates that it is possible to prune up to half of the intermediate layers of already trained transformers with a small degradation of performance. This indicates that removing some layers before training might be possible, though it is far from clear. For the time being, we ignore this possibility.

After accounting for uncertainties, we conclude that scaling past 1e32 FLOP will require changes to the network topology, or alternative solutions to scale batch sizes faster or layers slower than theoretical arguments would suggest.

What constraint is the most limiting?

So far we have examined four main bottlenecks to AI scaling in isolation. When considered together, they imply that training runs of up to 2e29 FLOP would be feasible by the end of the decade. This would represent a roughly 10,000-fold scale-up relative to current models, and it would mean that the historical trend of scaling could continue uninterrupted until 2030 (see figure 7).102

The dark shaded box corresponds to an interquartile range and light shaded region to an 80% confidence interval.

The most binding constraints are power and chip availability—see figure 7. Of these two, power may be more malleable—the energy industry is less concentrated and there is precedent for 100 GW expansion of the power supply, which suppliers ought to be able to execute if planning three to five years in advance. Expanding chip manufacturing faces multiple challenges: key processes like advanced packaging are mostly allocated to data center GPUs already, and building new fabs requires large capital investments and highly specialized labor.

Data stands out as the most uncertain bottleneck, with its uncertainty range spanning four orders of magnitude. The utility of multimodal data for advancing reasoning capabilities may be limited, and our estimates of both the available stock of such data, its quality, and the efficiency of current tokenization methods are less certain than those for text-based data. Ultimately, synthetic data might enable scaling indefinitely, but at a large compute cost.

Lastly, while the latency wall is a distant constraint, it looms on the horizon as an obstacle to be navigated. It might be pushed back by adopting more complex network topologies, involving larger pods or more connections between pods.

Will labs attempt to scale to these new heights?

We find that, based on extrapolating current trends around the key AI bottlenecks, training runs of up to 2e29 FLOP will be possible by the end of this decade. Achieving this scale would be on-trend: The largest training runs to date have been of the order of 5e25 FLOP, and six more years of the historical trend of 4x/year would result in models trained using roughly 2e29 FLOP. The price tag of the cluster needed for such a training run will be on the order of hundreds of billions of dollars.103 Will the AI industry actually seek to train models of this scale?

To date, increasing the scale of AI models has consistently led to improved capabilities. This has instilled a scaling-focused view of AI development that has resulted in the amount of spending on training runs growing by around 2.5x/year. Early indications suggest that this is likely to continue. Notably, it has been reported that Microsoft and OpenAI are working on plans for a data center project known as “Stargate” that could cost as much as $100 billion, set to launch in 2028. This suggests that major tech companies are indeed preparing to achieve the immense scales we’re considering.

Further evidence of AI systems’ potential for sufficiently large economic returns could emerge from scaling beyond GPT-4 to a GPT-6 equivalent model, coupled with substantial algorithmic improvements and post-training improvements. This evidence might manifest as newer models like GPT-5 generating over $20 billion in revenue within their first year of release; significant advancements in AI functionality, allowing models to seamlessly integrate into existing workflows, manipulate browser windows or virtual machines, and operate independently in the background. We expect that such developments could convince AI labs and their backers of these systems’ immense potential value.

The potential payoff for AI that can automate a substantial portion of economic tasks is enormous. It’s plausible that an economy would invest trillions of dollars in building up its stock of compute-related capital, including data centers, semiconductor fabrication plants, and lithography machines. To understand the scale of this potential investment, consider that global labor compensation is approximately $60T per year. Even without factoring in accelerated economic growth from AI automation, if it becomes feasible to develop AI capable of effectively substituting for human labor, investing trillions of dollars to capture even a fraction of this $60T flow would be economically justified.

Standard economic models predict that if AI automation reaches a point where it replaces most or all human labor, economic growth could accelerate by tenfold or more. Over just a few decades, this accelerated growth could increase economic output by several orders of magnitude. Given this potential, achieving complete or near-complete automation earlier could be worth a substantial portion of global output. Recognizing this immense value, investors may redirect significant portions of their capital from traditional sectors into AI development and its essential infrastructure (energy production and distribution, semiconductor fabrication plants, data centers). This potential for unprecedented economic growth could drive trillions of dollars in investment in AI development.104

Settling the question of whether companies or governments will be ready to invest upwards of tens of billions of dollars in large scale training runs is ultimately outside the scope of this article. But we think it is at least plausible, which is why we’ve undertaken this analysis.

Conclusion

In this article, we estimated the maximum feasible scale of AI training runs by 2030 by analyzing the availability and potential constraints key factors required for scaling up training runs. We examined four categories of bottlenecks (power constraints, chip manufacturing capacity, data scarcity, and the latency wall) to determine at what point they might render larger training runs infeasible. Our main result: Based on current trends, training runs of up to 2e29 FLOP will be feasible by the end of this decade. In other words, it is likely feasible, by the end of the decade, for an AI lab to train a model that would exceed GPT-4 in scale to the same degree that GPT-4 eclipses GPT-2 in training compute.

One of the most likely reasons that training runs above these scales might be infeasible is the amount of power that can be supplied by the grid. Substantially expanding the data center power supply by 2030 may be challenging due to grid-level constraints, carbon commitments, and political factors.

The second key constraint is the limited capacity to manufacture tens of millions of H100-equivalent chips per year. Capacity might be limited if capital expenditure is not substantially accelerated through the next decade, even if the relevant manufacturing capacity is mostly dedicated to producing GPUs or other AI accelerators.

Overall, these constraints still permit AI labs to scale at 4x/year through this decade, but these present major challenges that will need to be addressed to continue progress.

If AI training runs of this scale actually occur, it would be of tremendous significance. AI might attract investment over hundreds of billions of dollars, becoming the largest technological project in the history of humankind. Sheer scale translates into more performance and generality, suggesting that we might see advances in AI by the end of the decade as major as what we have experienced since the beginning of the decade.

Finally, through our work we have grappled with the uncertainty we face in predicting the trajectory of AI technologies. Despite their importance, power restrictions and chip manufacturing stand out as uncertain. We will be investigating these in more depth in future work.